We’ve always said Boot.dev is fun… but we’ve never said it’s easy.

I mean, it’s not for lack of trying. We do everything we can to make the content as easy to understand as it can be:

- We make meticulous updates to the explanations and lessons based on user feedback

- We have an AI assistant (Boots) that helps you when you get stuck

- We provide solutions to every challenge if you’re still stuck

What we won’t do is skip the hard stuff. Some traditional bootcamps and e-learning platforms avoid the problem altogether by pretending that you don’t need to learn hard things… we don’t like that. It has the side effect of flooding the market with lower-skill developers who expect to land jobs they aren’t qualified for.

So we’ve never been interested in that.

Boot.dev takes time, and yes, at times the courses are hard. But there’s not much worth doing in life that’s easy, right?

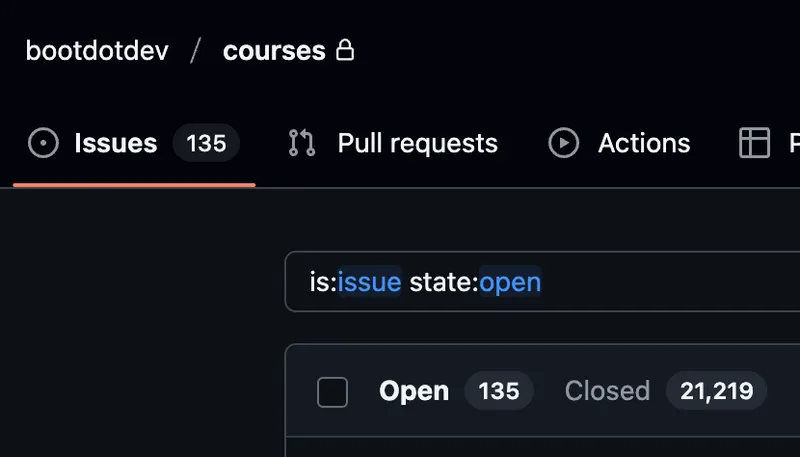

Okay, so what do I mean by “hard”? Well, it’s not like the courses skip stuff or explain it poorly. I know that’s a bold claim, but we at least try our hardest, spending a lot of time tweaking the content and making micro quality updates based on your reports:

We’ve handled 21,219 reports on lessons!

So no, when I talk about “hard”, I’m talking about a lack of practice. That’s where we’ve failed.

For example, say you’re learning about functions in Python, you:

- Read the explanation

- Watch the video

- Start the challenge

- Get stuck

- Chat with Boots (AI assistant)

- Complete it with his helpful nudges

Great. You solved it. But are you really ready to move on to the next concept?

You got help with functions… so it would be nice if you could now practice functions again, and hopefully complete it this time without help?

Well, before the Training Grounds, you only had two options:

- Reset the lesson and do it again - but that sucks because you already know the answer to that one

- Forge ahead to the next concept and challenge - but now you’re building on top of a shaky foundation.

So it can feel like everything is moving really fast. And the answer to the problem?

More practice challenges of course!

But that’s easier said than done.

We have over 2,500 hand-crafted lessons and their associated challenges on Boot.dev, so adding just 2 more optional challenges to each lesson would mean writing 5,000 more challenges by hand. That’s a lot.

But the worst part about doing that is that most students don’t need all 5,000. They only need a few extra practice challenges on specific lessons. Which lessons? That varies wildly from student to student.

So even if we did all that work (which would mean releasing fewer new courses) we’d still have some concepts with not enough practice, and many with more than anyone needed.

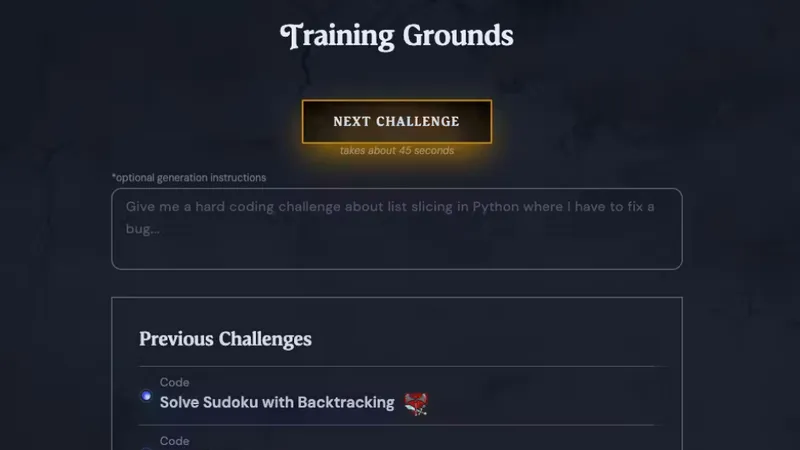

All this to say… we launched The Training Grounds today, and it solves this problem!

Of course it’s 2025, so obviously it’s an AI thing, but I swear it’s not just one-shot slop! We’ve been working on this system for quite a while now, and a lot goes into it. It works like this:

- You click “Next Challenge”

- The system looks at:

  - What courses you’ve completed

  - What topics you’ve learned recently

  - What you’ve been struggling with

  - What might need to be reviewed based on time elapsed (spaced repetition)

- Based on all that, it selects a topic, a difficulty, a challenge type, and other metadata that will constitute a “good challenge” for you

- It sources examples from our existing hand-written challenges that match the type of challenge it’s trying to create

- It creates a new challenge from scratch (GPT-5/Claude Sonnet 4 at time of writing), using those examples as inspiration

- It runs the challenge on our backend to ensure it’s valid and has a correct solution

- It makes any updates based on the execution results

- It serves it to you in the Training Grounds
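To make those steps a bit more concrete, here’s a rough sketch of two of them: picking a topic from the signals listed above, and the generate, run, fix loop. This is not our production code. The `TopicStats` fields, the weights in `priority`, and the `generate`/`run` callables are all made up for illustration; the real system weighs more signals and calls an LLM where the sketch takes a plain function.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class TopicStats:
    name: str
    days_since_practice: float  # time elapsed since the last attempt
    recent_failures: int        # attempts that failed or needed help
    learned_recently: bool      # comes from a recently completed lesson


def priority(t: TopicStats) -> float:
    # Hypothetical weights: struggling counts most, then time elapsed
    # (capped at 30 days), plus a small bump for recently learned topics.
    score = 2.0 * t.recent_failures + min(t.days_since_practice, 30.0) / 10.0
    return score + (1.0 if t.learned_recently else 0.0)


def pick_topic(topics: list[TopicStats]) -> TopicStats:
    # Choose the topic that currently looks most in need of practice.
    return max(topics, key=priority)


def generate_valid_challenge(
    topic: str,
    generate: Callable[[str, Optional[str]], str],
    run: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Generate a challenge, run it, and retry with the error as feedback.

    generate(topic, feedback) returns a challenge (a stand-in for the LLM call).
    run(challenge) returns an error message, or None if the challenge is valid.
    """
    feedback = None
    for _ in range(max_attempts):
        challenge = generate(topic, feedback)
        feedback = run(challenge)
        if feedback is None:
            return challenge
    return None  # give up after max_attempts failed runs
```

The point of the loop is that a challenge only reaches you after it has actually executed without errors, and a failed run feeds its error back into the next generation attempt.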

Now, you do occasionally get a really dumb AI-slop challenge. Cost of doing business with AI I guess.

But not very often - we’ve been working really hard to fine-tune the system. And as we get more and more skip/rating feedback into the system, we should be able to ship some big quality improvements over the next few months.

And of course you can skip a challenge at any time and provide a reason, and the system will see that note and generate fewer challenges like that for you in the future.
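One simple way to picture “fewer challenges like that” is a per-type weight that shrinks each time you skip that type. To be clear, this is an assumption for illustration: the real system reads your written skip reason, while the sketch below only counts skips, and the `type_weights` helper and its `penalty` value are hypothetical.

```python
from collections import Counter


def type_weights(
    challenge_types: list[str],
    skipped_types: list[str],
    penalty: float = 0.5,
) -> dict[str, float]:
    # Every skip of a challenge type multiplies its weight by `penalty`,
    # so skipped types are picked less often but never disappear entirely.
    skips = Counter(skipped_types)
    return {t: penalty ** skips[t] for t in challenge_types}
```

Those weights could then be passed to something like `random.choices(list(w), weights=list(w.values()))` to pick the next challenge type.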

Now the biggest drawback at the moment is that we’ve been entirely focused on the quality of challenges - so… it’s kinda slow. It takes about 45 seconds to generate a new challenge. That’s a limitation of how quickly our backing LLMs can output the tokens to write the challenges. Thinking tokens are slow and expensive, but the quality is so much better that it’s worth it.

That said, we have some ideas for updates over the next couple of months that will make challenge assignment a lot faster - things like reassigning already-generated 5-star challenges across students, and pregenerating challenges in the background.
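Here’s a minimal sketch of how those two ideas could fit together, assuming a hypothetical `ChallengePool` that tries a 5-star challenge you haven’t seen first, then a pregenerated one, and only falls back to the slow generator as a last resort. None of these names exist in our codebase, and `generate` stands in for the roughly 45-second LLM call.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Challenge:
    id: str
    topic: str
    rating: int = 0  # 0 means nobody has rated it yet


@dataclass
class ChallengePool:
    generate: Callable[[str], Challenge]  # slow generator (stand-in for the LLM)
    rated: list[Challenge] = field(default_factory=list)
    pregenerated: dict[str, deque] = field(default_factory=dict)

    def pregenerate(self, topic: str, count: int = 1) -> None:
        # Meant to run in the background so the student never waits on it.
        queue = self.pregenerated.setdefault(topic, deque())
        for _ in range(count):
            queue.append(self.generate(topic))

    def next_challenge(self, topic: str, seen_ids: set[str]) -> Challenge:
        # 1. Reuse a 5-star challenge this student has not seen yet.
        for c in self.rated:
            if c.topic == topic and c.rating == 5 and c.id not in seen_ids:
                return c
        # 2. Otherwise hand out a challenge that was generated ahead of time.
        queue = self.pregenerated.get(topic)
        if queue:
            return queue.popleft()
        # 3. Last resort: generate on demand (the slow path).
        return self.generate(topic)
```

In both fast paths the student gets a challenge immediately, and the slow generation only happens when nothing suitable is already on hand.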

So anyways, here’s the link to the Training Grounds if you want to check it out!