The age of information is not what we all hoped it would be. We successfully digitized the majority of human knowledge, and we even made it freely accessible to most. Now the problem is different: we have too much information. Answers to most questions can be found in thousands of distinct places online, and the new problem is “whose information can we trust?”

What platforms think they should do about fake news

Twitter and Facebook have recently been under scrutiny for their censorship of coronavirus-related misinformation. For example, a video claiming hydroxychloroquine is a coronavirus cure recently went viral on Facebook, and the video keeps getting taken down. The video contains some wild assertions made by Stella Immanuel, who also happens to believe that gynecological problems are the result of spiritual relationships.

By removing content they believe to be dubious, Twitter and Facebook have made themselves arbiters of truth. Anecdotally, every post I’ve seen them remove has contained misinformation, but the fact remains: these platforms have become self-appointed authorities on the veracity of our information.

This is a problem.

So we can’t censor?

We certainly can, and we certainly should in some cases. Let’s get some obvious ones out of the way:

- Child Pornography

- Death Threats

- Doxing

There may be some other clear examples where censoring is unquestionably the right choice, though I doubt there are many. Let’s look at some more controversial examples:

- Hate Speech

- Misinformation

I would posit that here the answer is contingent on who is doing the censoring. While hate speech and misinformation are disgusting, I don’t want a government deciding what is hate speech, or deciding what is truth.

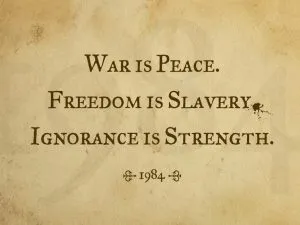

George Orwell, 1984

That said, I certainly want an online system where hate speech and misinformation are effectively filtered out of the conversation. Ideally, every online participant would be a virtuous, educated, and concerned conversationalist. If that were the case, undesirable posts would effectively be ignored due to not receiving the likes, shares, upvotes, and comments they need to spread.

In reality, we can’t take such a pacifistic approach. We need to protect our gardens a bit more.

What should social networks do about misinformation?

The steps platforms take are more important than what users do on a personal level to combat misinformation. Through combinations of UI, UX, tooling, and even moderation, platforms can directly shape user behavior so that more accurate information circulates. As we’ll see in the next section, all individual users can do is try to account for their own biases and look to primary sources.

All online platforms are responsible for the tools they provide for moderation, if not for the moderation itself.

Social platforms should:

- Remove dangerous content such as doxing, threats, child trafficking, etc.

- Provide tools that allow users to “unlike” or “unfollow” content, even if the unlikes aren’t visible

- Attempt to determine whether a user is saying “I don’t agree” vs “this is factually incorrect”

- Reduce the reach of posts reported as factually incorrect, and potentially even mark them as dubious

- Show users more of the content they don’t agree with, and attempt to break down echo chambers of thought
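To make the reporting and reach ideas above a bit more concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Post` fields, the two report reasons, and the penalty and threshold numbers are assumptions chosen for illustration, not a description of how any real platform ranks content.

```python
from dataclasses import dataclass
from enum import Enum


class ReportReason(Enum):
    # Hypothetical categories: the user picks one when reporting.
    DISAGREE = "disagree"                # "I don't agree"
    FACTUALLY_INCORRECT = "incorrect"    # "this is factually incorrect"


@dataclass
class Post:
    views: int
    base_score: float        # ranking score before any adjustment
    factual_reports: int = 0
    disagree_reports: int = 0


def record_report(post: Post, reason: ReportReason) -> None:
    """Count the report under the reason the user selected."""
    if reason is ReportReason.FACTUALLY_INCORRECT:
        post.factual_reports += 1
    else:
        post.disagree_reports += 1


def factual_report_rate(post: Post) -> float:
    """Fraction of viewers who reported the post as factually incorrect."""
    if post.views == 0:
        return 0.0
    return post.factual_reports / post.views


def adjusted_score(post: Post, max_penalty: float = 0.8,
                   saturation_rate: float = 0.05) -> float:
    """Scale the score down as the factual-report rate rises.

    The penalty grows linearly and is capped at max_penalty once the rate
    reaches saturation_rate. Both defaults are arbitrary illustrations.
    Disagreement reports are deliberately ignored here.
    """
    rate = min(factual_report_rate(post), saturation_rate)
    penalty = max_penalty * rate / saturation_rate
    return post.base_score * (1.0 - penalty)


def is_dubious(post: Post, threshold_rate: float = 0.02,
               min_views: int = 100) -> bool:
    """Flag a post for a 'dubious' label once enough viewers report it."""
    return post.views >= min_views and factual_report_rate(post) >= threshold_rate
```

The design choice worth noticing is that “I don’t agree” reports are recorded but never reduce reach. Only reports of factual inaccuracy do, and even then the post is down-ranked and labeled rather than removed.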

Social platforms should not be eager to:

- Remove misleading content

By removing misleading content, platforms run the risk of fueling an argumentum ad martyrdom mentality. Removing information can have an adverse effect, causing people to suspect there is a nefarious reason for removing it.

But the fact that some geniuses were laughed at does not imply that all who are laughed at are geniuses. They laughed at Columbus, they laughed at Fulton, they laughed at the Wright brothers. But they also laughed at Bozo the Clown.

- Carl Sagan, Probably

What should users do about misinformation?

As I mentioned before, this is mostly a matter of educating the userbase. The behaviors below are good rules of thumb for any consumer of information. That said, platforms should do more to overtly educate their users about these kinds of critical thinking skills, and even encourage users to put them into practice via reminders or tactful in-app messaging.

Users should:

- Read entire articles before liking, sharing, or commenting

- Deploy extra skepticism toward information with a clear political or monetary agenda

- Attempt to be self-aware about their preconceived notions and confirmation biases

- Look for the primary source of information

- Ensure information is up-to-date by checking publishing timestamps

Users should not:

- Reward clickbait titles with engagement

- Exclusively follow, subscribe, or search for content that aligns with their current beliefs

- Assume their position is valid because people are trying to remove their content

- Trust articles and posts coming from sites that appear unsafe