Prehistory

The Antikythera Mechanism

For as long as humans have needed to count, they have looked for ways to make the process simpler. The abacus, first invented in Sumer sometime between 2700 and 2300 BCE, was more a rudimentary counting aid than an actual computer. It did, however, represent the first stride humans took towards using tools to assist with mathematics. Much later, around 100 BCE, the Antikythera Mechanism was used to calculate astronomical positions and predict eclipses. Unlike the abacus, the mechanism is widely regarded as the earliest known analog computer. As time went on, analog machines like it would be used to keep track of the stars and, eventually, to keep track of time.

Industrial Revolution

As in many other fields, it took the Industrial Revolution to turn the early lessons of Computer Science into something new. Though Gottfried Wilhelm Leibniz had developed the basic logic for binary mathematics in 1702, it would take over a century and the efforts of George Boole to transform it into a complete, mathematically modeled system. Boole published his Boolean algebra in 1854. Binary encodings, such as the holes in a punch card, let mechanical devices take on tasks that had previously fallen to human hands. Alongside the development of binary logic, Charles Babbage described the "Analytical Engine" in 1837, and in 1843 Ada Lovelace published what is often regarded as the first computer algorithm, written for that machine. While these strides are not to be taken lightly, they remained theoretical, since the Analytical Engine was never completed. They would, however, lay the groundwork for the future of computing devices.

1930 - 1960

In 1936, Alan Turing published his work on the Turing machine, an abstract model of a digital computer. His universal machine introduced the concept of the stored program and underlies the design of modern computers. The machine was hypothetical at the time, but it would not be long before it became a reality, and today nearly all modern programming languages are described as Turing-complete. Around the same time, Akira Nakashima's switching circuit theory paved the way for the use of binary in digital computers. His work became the basis for circuit design going forward, especially after the theory became known to electrical engineers during World War II.

Alan Turing

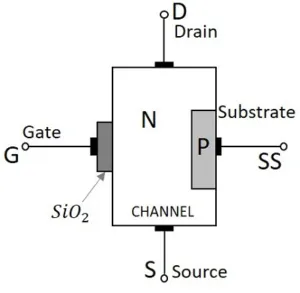

The first electronic digital computer, the Atanasoff-Berry Computer, was built on the Iowa State campus between 1939 and 1942. Only 8 years later, in 1950, Britain's National Physical Laboratory completed the Pilot ACE, a small, programmable computer with an operating speed of 1 MHz. That is a pittance by today's standards, but at the time, it held the title of the fastest computer in the world. Alongside these developments, Bell Labs built the first working transistor in 1947. This earned its inventors, John Bardeen, Walter Brattain, and William Shockley, the Nobel Prize in Physics in 1956, and their design was used by governments and militaries for specialized purposes. Bell Labs then went on to produce the MOSFET (metal-oxide-semiconductor field-effect transistor) in 1959, the first transistor that could be miniaturized and mass-produced for a wide variety of applications. The MOSFET would lead to the microcomputer revolution and remains a foundation of digital electronics.

1961 - Present

Following the development of the MOSFET, the idea of a personal computer became more and more appealing. The issue was that most computers were prohibitively expensive and used almost exclusively by governments, corporations, militaries, and universities. That changed with the invention of the microprocessor. While it had been possible for some time to build a microcomputer, its processor still required several large circuit boards to function. Once it was found that the processor could be placed on a single integrated circuit, thanks to the MOSFET, it could be commercialized. The introduction of the Intel 4004 in 1971 caused the cost of microprocessors to decline rapidly, and the stage was set for personal computers to become a household item.

MOSFET Diagram

The development of computers began to proceed at a rapid pace. From 1980 to 2000, dozens of advancements were made in compression, miniaturization, portability, and manufacturing. In 1991, the World Wide Web became publicly accessible, leading to the sharing of information like never before. By 2000, nearly every country had a connection to the internet, and nearly half the people in the United States used it regularly. Personal computers became ubiquitous, followed shortly by cell phones, and to keep up with the demands of an increasingly technological world, those specializing in Computer Science came to be much sought after.

With the development of cloud technology, artificial intelligence that makes use of advanced heuristic modeling, machine learning, and other modern breakthroughs, it's becoming clear that computer science will continue to develop at an accelerating pace. The strides made in the last 30 years far outstrip those made in the previous 100, which in turn outstrip those made in the previous 4,000. As technology improves, it remains to be seen where Computer Science will take us next.